The most common definition of reproducibility (and replication) was first noted by Claerbout and Karrenbach in 1992 Claerbout & Karrenbach, 1992 and has been used in computational science literature since then. Another popular definition has been introduced in 2013 by the Association for Computing Machinery (ACM) Ivie & Thain, 2018, which swapped the meaning of the terms ‘reproducible’ and ‘replicable’ compared to Claerbout and Karrenbach.

The following table contrasts both definitions Heroux et al., 2018.

| Term | Claerbout & Karrenbach | ACM |

|---|---|---|

| Reproducible | Authors provide all the necessary data and the computer codes to run the analysis again, re-creating the results. | (Different team, different experimental setup.) The measurement can be obtained with stated precision by a different team, a different measuring system, in a different location on multiple trials. For computational experiments, this means that an independent group can obtain the same result using artifacts which they develop completely independently. |

| Replicable | A study that arrives at the same scientific findings as another study, collecting new data (possibly with different methods) and completing new analyses. | (Different team, same experimental setup.) The measurement can be obtained with stated precision by a different team using the same measurement procedure, the same measuring system, under the same operating conditions, in the same or a different location on multiple trials. For computational experiments, this means that an independent group can obtain the same result using the author’s artifacts. |

Barba (2018) Barba, 2018 conducted a detailed literature review on the usage of reproducible/replicable covering several disciplines. Most papers and disciplines use the terminology as defined by Claerbout and Karrenbach, whereas microbiology, immunology and computer science tend to follow the ACM use of reproducibility and replication. In political science and economics literature, both terms are used interchangeably.

In addition to these high level definitions of reproducibility, some authors provide more detailed distinctions. Victoria Stodden Stodden, 2014, a prominent scholar on this topic, has for example identified the following further distinctions:

Computational reproducibility: When detailed information is provided about code, software, hardware and implementation details.

Empirical reproducibility: When detailed information is provided about non-computational empirical scientific experiments and observations. In practice, this is enabled by making the data and details of how it was collected freely available.

Statistical reproducibility: When detailed information is provided, for example, about the choice of statistical tests, model parameters, and threshold values. This mostly relates to pre-registration of study design to prevent p-value hacking and other manipulations.

Table of Definitions for Reproducibility¶

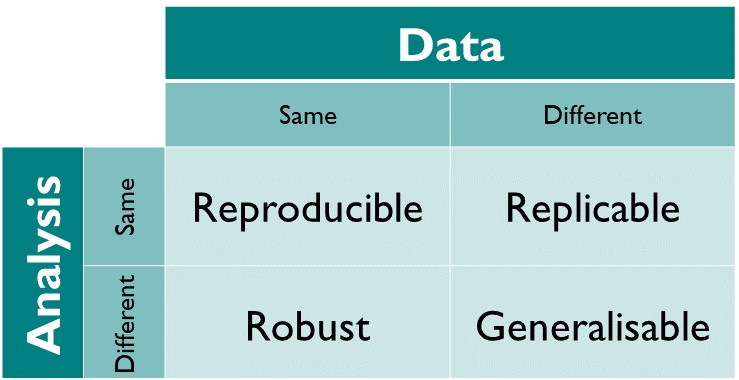

At The Turing Way, we define reproducible research as work that can be independently recreated from the same data and the same code that the original team used. Reproducible is distinct from replicable, robust and generalisable as described in the figure below.

Figure 1:How the Turing Way defines reproducible research

The different dimensions of reproducible research described in the matrix above have the following definitions:

- Reproducible: A result is reproducible when the same analysis steps performed on the same dataset consistently produces the same answer.

- Replicable: A result is replicable when the same analysis performed on different datasets produces qualitatively similar answers.

- Robust: A result is robust when the same dataset is subjected to different analysis workflows to answer the same research question (for example one pipeline written in R and another written in Python) and a qualitatively similar or identical answer is produced. Robust results show that the work is not dependent on the specificities of the programming language chosen to perform the analysis.

- Generalisable: Combining replicable and robust findings allow us to form generalisable results. Note that running an analysis on a different software implementation and with a different dataset does not provide generalised results. There will be many more steps to know how well the work applies to all the different aspects of the research question. Generalisation is an important step towards understanding that the result is not dependent on a particular dataset nor a particular version of the analysis pipeline.

More information on these definitions can be found in “Reproducibility vs. Replicability: A Brief History of a Confused Terminology” by Hans E. Plesser Plesser, 2018.

Figure 2:The Turing Way project illustration by Scriberia. Used under a CC-BY 4.0 licence. DOI: The Turing Way Community & Scriberia (2024).

Reproducible But Not Open¶

The Turing Way recognises that some research will use sensitive data that cannot be shared and this handbook will provide guides on how your research can be reproducible without all parts necessarily being open.

- Claerbout, J. F., & Karrenbach, M. (1992). Electronic documents give reproducible research a new meaning. In SEG Technical Program Expanded Abstracts. https://doi.org/10.1190/1.1822162

- Ivie, P., & Thain, D. (2018). Reproducibility in Scientific Computing. ACM Comput. Surv., 51(3), 1–36. 10.1145/3186266

- Heroux, M. A., Barba, L., Parashar, M., Stodden, V., & Taufer, M. (2018). Toward a Compatible Reproducibility Taxonomy for Computational and Computing Sciences. OSTI.GOV Collections. 10.2172/1481626

- Barba, L. A. (2018). Terminologies for Reproducible Research. arXiv. https://arxiv.org/abs/1802.03311v1

- Stodden, V. (2014). Edge.org. https://www.edge.org/response-detail/25340

- Plesser, H. E. (2018). Reproducibility vs. Replicability: A Brief History of a Confused Terminology. Front. Neuroinf., 11. 10.3389/fninf.2017.00076

- The Turing Way Community, & Scriberia. (2024). Illustrations from The Turing Way: Shared under CC-BY 4.0 for reuse. Zenodo. 10.5281/ZENODO.3332807